First City Tower (Houston), designed by Morris Aubry Architects

Synopsis

The question of communication between humans and machines is

complex and multilayered. It bridges linguistics, computer science, cognition

and design. This is the question this research tries to address.

For too long, software development has been

branded as a finite discipline. We are taught to think of a computer system as

a tidy problem–or rather a tidy solution–that can exist within clear boundaries

of planning, be funded on the merit of its utility, and carry on as long as it is

interesting to our users.

New technologies are capable of multilayered

computation, through neural nets and deep learning. Tools are now able to

understand–or at least rephrase–messages they’re given. The kind of brute-force,

one-dimensional computation we have been doing is not only limiting

innovation, but also distracting from the real potential that is blocked not by

technology, but by our own mental models.

This thread starts in the earliest days of

computing, in 1948, when Claude Shannon published his seminal paper A Mathematical Theory of Communication, establishing the since-accepted idea

that everything–all information, in all of its forms–can be broken into 1’s and

0’s. All logical flows, questions and answers, software systems and other

decision trees were born from this revelation. Prior to Shannon’s

work, machines were analog, semantically tied to the natural phenomena they referenced.

Before the information age, machines were a convenient packaging of

nature into a useful form. Tools were analogues of nature, and not abstracted

from it.

An analog clock is analogous to the movement of

the sun (or might even use it)–but a digital one simply sequences

information. An analog camera captures light; a digital one captures bits.

A telephone line moves voice; the internet moves data.

What Shannon’s work highlights is the flexibility

of abstraction. Where tools were once designed around the information they

carried, the new binary norm lets us ignore that and know that whatever

we want to say–no matter how long, in what language and whether or not it makes

sense–can be communicated in bits.
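To make this concrete, here is a minimal sketch of the idea that any message can be reduced to bits and recovered intact. It assumes UTF-8 as the text encoding, which is a choice made for this illustration and not something Shannon's paper prescribes; the function names `to_bits` and `from_bits` are likewise hypothetical.

```python
def to_bits(message: str) -> str:
    """Encode a message as a string of 1's and 0's via its UTF-8 bytes (assumed encoding)."""
    return "".join(f"{byte:08b}" for byte in message.encode("utf-8"))


def from_bits(bits: str) -> str:
    """Decode a string of 1's and 0's back into the original message."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")


# The encoding is indifferent to language and to whether the text makes sense.
for message in ["hello", "שלום", "colorless green ideas sleep furiously"]:
    bits = to_bits(message)
    assert from_bits(bits) == message  # the round trip loses nothing
    print(f"{message!r} -> {len(bits)} bits")
```

Nothing in the channel needs to know what the message means: the same two functions handle English, Hebrew and a grammatical but meaningless sentence.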

This is a pivotal point in the science of tools.

It gave us scale–we could never have had the internet in its current form

without the foundation of information abstraction.

The next step was the creation of systems. In the

late 1970s Alan Kay was working on human-first tools at Xerox PARC. He was keen

on releasing systems that are user-friendly and intuitive to use. The problem

was that at the time there was no server architecture that could connect data

to users in an intuitive way. To that end Kay brought in Trygve Reenskaug, who

together with Adele Goldberg, came up with the first version of Model View

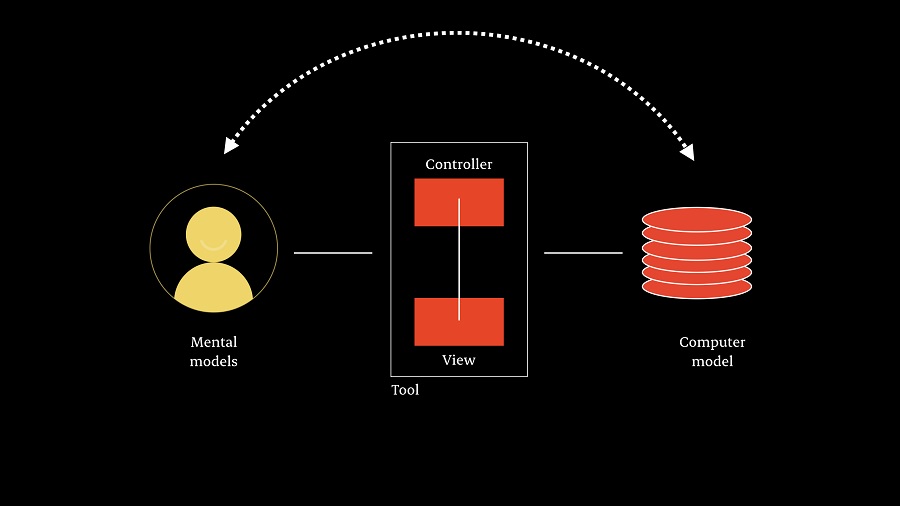

Controller.

|

| Reconstruction of the original version of Model View Controller |

Model View Controller (MVC) is a simple and highly flexible means of connecting

large quantities of data with users in an intuitive way. The core principle

was powerfully simple–map human mental models to computational behavior. That

core idea single-handedly set the foundation for the field of user interface (UI)

design and the technological framework for graphical user interfaces (GUIs).
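The separation of roles can be sketched in a few lines. This is only an illustration of the general pattern, not a reconstruction of Reenskaug's original Smalltalk design; the class names (`NoteModel`, `NoteView`, `NoteController`) and the note-taking scenario are assumptions made for the example.

```python
class NoteModel:
    """Model: holds the data and notifies observers when it changes."""

    def __init__(self):
        self._notes = []
        self._observers = []

    def subscribe(self, callback):
        self._observers.append(callback)

    def add(self, text):
        self._notes.append(text)
        for callback in self._observers:
            callback(list(self._notes))  # pass a copy so views cannot mutate the model


class NoteView:
    """View: presents the model's data in a form the user recognizes."""

    def __init__(self):
        self.rendered = ""

    def render(self, notes):
        self.rendered = "\n".join(f"{i}. {note}" for i, note in enumerate(notes, 1))


class NoteController:
    """Controller: translates raw user input into operations on the model."""

    def __init__(self, model):
        self._model = model

    def handle_input(self, raw):
        text = raw.strip()
        if text:  # ignore empty input
            self._model.add(text)


model = NoteModel()
view = NoteView()
model.subscribe(view.render)
controller = NoteController(model)

controller.handle_input("  call Trygve ")
controller.handle_input("")
controller.handle_input("read Shannon")
print(view.rendered)
```

The user only ever deals with the view and the controller, which speak in terms of their mental model (a numbered list of notes), while the model is free to store the data however the machine prefers.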

As time progressed–and especially once the

internet opened up–MVC became flatter and more industrialized. In its

original version, MVC is a triangular relationship between a human, a machine

and a tool, keeping the tool (and any monetization of it) away from users or

data. In the new industrialist version it made more sense to guard the data,

especially for its monetization potential, and to offer the utility more as a

means to contain users within the boundaries of your system (more on this idea

in Gated Products).

Modern, flatter version of Model View Controller

Through the combined legacy of Shannon and Reenskaug

we have written a status quo of extreme abstraction and rigid efficiency. The

meaning of information is irrelevant; all data can be reduced to 1’s and 0’s.

Once reduction has taken place we can store our data in stationary databases,

serve it through a proprietary interface point, anchor user behavior through a

set of utilities and guard the system from any type of interoperability.

In Information and the Modern Corporation (MIT Press), James W. Cortada makes

the crucial distinction that industrialist thinking taught us to forget.

First, we have data, then we can hopefully deduce

some information from it, and only later does it become knowledge.

Data, information, knowledge, and wisdom all are

needed by people to do their work and live their lives.

Data are facts, such as names or numbers. If

sensors are collecting these, there are electronic impulses when something

happens or when something moves.

Information is slightly different in that it

combines various data to say something that the data alone can’t say. For

instance, data on our spending habits tell us about our financial behavior and

about our patterns of expenditures–that is information, not just groups of

unrelated numbers.

Knowledge is more complicated than data or

information because it combines data, information and experiences from

logically connected groups of facts (such as budget data from a department)

with things that have no direct or obvious connection (such as previous jobs

and experiences).
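The step from data to information in the spending example above can be sketched as a simple aggregation. The transactions, amounts and function names below are invented for illustration; amounts are kept in whole cents to avoid rounding issues.

```python
from collections import defaultdict

# Data: isolated facts. Each record is (date, category, amount in cents).
# These transactions are hypothetical.
transactions = [
    ("2024-01-01", "rent", 120000),
    ("2024-01-03", "groceries", 5400),
    ("2024-01-05", "coffee", 450),
    ("2024-01-10", "groceries", 4600),
    ("2024-01-12", "coffee", 550),
]


def spending_by_category(records):
    """Information: combine individual records into totals per category."""
    totals = defaultdict(int)
    for _date, category, cents in records:
        totals[category] += cents
    return dict(totals)


def largest_share(totals):
    """Return the dominant category and its share of overall spending."""
    overall = sum(totals.values())
    category = max(totals, key=totals.get)
    return category, totals[category] / overall


totals = spending_by_category(transactions)
category, share = largest_share(totals)
print(f"{category} accounts for {share:.0%} of spending")
```

No single record says anything about a pattern of expenditure; the pattern only appears once the records are combined. Knowledge, in Cortada's sense, would require adding context that is not in the table at all.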

Back to Shannon’s reductionist approach and

Reenskaug’s assembly line. Are we moving data, information, knowledge or

wisdom? Traditionally (over the last 70 years) we have decoded human knowledge

into organized information models and then scattered it as data across

communication channels only to later have that encoded back into tabular

information and cognitively processed into knowledge.

As our machines are starting to be able to

semantically understand information (speaking to Alexa is one example), new

questions will need to be answered. How much decoding do we need to do when

using a system? Do we really need to go to a terminal (phone, or laptop) and

fit things in boxes for the machine to work? Is there a way for the machine to

come closer to the way we exchange knowledge and wisdom? What can be

done to ease the encoding on the human recipient side? What form should

information be in when we receive it back from a machine?

The question of communication between humans and

machines is complex and multilayered. It bridges linguistics, computer science,

cognition and design. This is the question this research tries to address.

Some thoughts about language in conversational systems. Part of an ARB Major Seed

Grant, from McMaster University (Canada), titled Language Architecture as a

Model of Human-centered Artificial Intelligences (PI: Ivona Kučerová,

Collaborators: Nitzan Hermon & Ida Toivonen).

Nitzan Hermon is a

designer and researcher of AI, human-machine augmentation and language. Through

his writing and his academic and industry work, he is shaping a new, sober narrative of

the collaboration between humans and machines.

Originally published on EVERYTHING WILL HAPPEN and THE CREATIVITY POST
